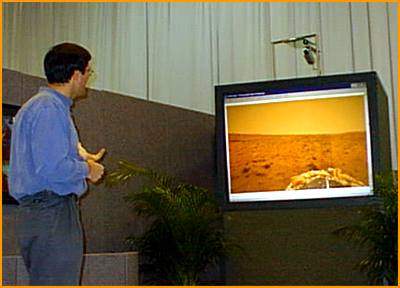

walk on Mars!

The Visualization Space computer scans a room to establish a baseline view of the background, then creates outlines of human forms as they move through the space. Using detailed color analysis, the computer can keep track of hands and limbs. Users can then point at, grab, and move virtual objects without resorting to clunky VR gloves or other pointing devices. Vague spoken phrases such as "make it bigger" trigger onscreen action, and the application can learn what each user means by those phrases. You can even navigate virtual spaces simply by walking around: moving to the left or right gives you a different viewing angle, and to travel from place to place you need only point at a spot on the screen and say, "Go there." The demonstration we saw featured a VRML world built from the recent Mars Pathfinder data, allowing the user to "walk" on Mars.

It's cool, but don't expect to see Visualization Space on your desktop anytime in the next couple of years: even on a quad Pentium Pro system with half a gigabyte of RAM, the demo ran slowly.

Friday, Nov 21, 1997

Copyright © 1995-98 CNET, Inc. All rights reserved.